Profile likelihood

To perform a basic profile likelihood analysis of a fit obtained, basico implements the Schaber method that in turn fixes each optimization parameter, while reoptimizing the remaining parameters. This scan is performed in both directions. Ideally, what we would want to see for identified parameters, is that the result gets worse, as we go away from the found solution. If we find a flat line, that means the parameter is not identifiable, if we find a curve that is flat on one side, we can only identify the corresponding bound.

Since this could potentially take quite some time basico breaks this task into three steps:

generating different copasi models for each of the scans. (these files could then be moved to a cluster environment for parallel computation)

running the the scans (here basico uses the python mulitiprocessing library), which places reports into a specified directory

plotting the result, by specifying the directory where the reports have been stashed.

Let’s try this on an example model.

[1]:

from basico import *

from basico.task_profile_likelihood import prepare_files, process_dir, plot_data

Preparing files

The first step is to prepare the files, here we load an example model, run the parameter estimation

[2]:

example_model = load_example('LM')

run_parameter_estimation(method=PE.HOOKE_JEEVES, update_model=True)

[2]:

| lower | upper | sol | affected | |

|---|---|---|---|---|

| name | ||||

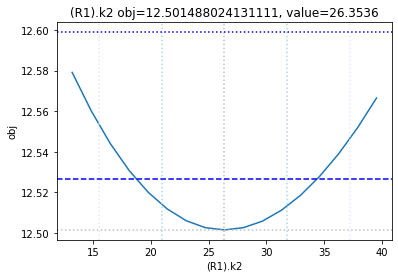

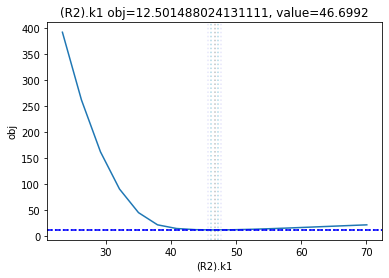

| (R1).k2 | 1e-6 | 1e6 | 26.353601 | [] |

| (R2).k1 | 1e-6 | 1e6 | 46.699200 | [] |

| Values[offset] | -0.2 | 0.4 | 0.046461 | [Experiment_1] |

| Values[offset] | -0.2 | 0.4 | 0.077161 | [Experiment_3] |

| Values[offset] | -0.2 | 0.4 | -0.049828 | [Experiment] |

| Values[offset] | -0.2 | 0.4 | 0.030610 | [Experiment_4] |

| Values[offset] | -0.2 | 0.4 | 0.058074 | [Experiment_2] |

at this point we also want to make sure, that we have reached a good fit, so we look at the fit statistic:

[3]:

get_fit_statistic()

[3]:

{'obj': 12.501488024131111,

'rms': 0.15812329381929224,

'sd': 0.15924191621103437,

'f_evals': 3008,

'failed_evals_exception': 0,

'failed_evals_nan': 0,

'cpu_time': 5.0625,

'data_points': 500,

'valid_data_points': 500,

'evals_per_sec': 0.0016830119680851063}

now we create a temporary directory, and save the file and experimental data into it. After that i unload the model.

[4]:

from tempfile import mkdtemp

data_dir = mkdtemp()

original_model = os.path.join(data_dir, 'original.cps')

save_model(original_model)

remove_datamodel(example_model)

the code has just been ported from an earlier version, so lets debug it for now

[5]:

#import logging

#logging.basicConfig()

#logging.getLogger().setLevel(logging.DEBUG)

the data will be stored in those files:

[6]:

#print(data_dir)

#print(original_model)

now we go ahead and prepare all the files, this is done by the prepare_files function that accepts the following arguments:

filename: the template file with the fit we want to analyzedata_dir: the directory in which to store the files in

the remaining parameters are optional:

iterations=50: if not specified hooks & Jeeves / Levenberg Marquardt will perform50iterationsscan_interval=40: if not specified 40 scan intervals will be taken (40 in each direction!)lower_adjustment='-50%': the multiplier for the lower bound of the scan. By default the interval will be -50% of the found parmeter value.upper_adjustment='+50%': the multiplier for the upper bound of the scan, defaults to ‘+50%’ foundmodulation=0.01: modulation parameter for Levenberg Marquardttolerance=1e-06: tolerance for the optimization methoddisable_plots=True: if true, other plots in the file will be removeddisable_tasks=True: if true, other tasks in the file will be deleteduse_hooke=False: if true, use hooks & jeaves otherwise Levenberg Marquadtprefix="out_": the prefix for each file generated.

For this example we just do a scan of 8 values in each direction for 20 iterations:

[7]:

prepare_files(original_model, scan_interval=8, iterations=20, data_dir=data_dir)

[7]:

{'num_params': 7,

'num_data': 500,

'obj': 12.501488024131111,

'param_sds': {'(R1).k2': 5.433042366390387,

'(R2).k1': 0.5087112193172452,

'Values[offset].InitialValue': 0.01143802470807778}}

Processing the files:

Here we use the python multiprocessing pool, to launch CopasiSE to process all the files we have generated in the data directory we process 4 files at the same time, but you can supply a pool_size parameter to change that. This step can be skipped, if you copy the files to a cluster environment and run them there.

To specify a specific CopasiSE version to use you can pass it along the module’s COPASI_SE field. For example like so:

basico.task_profile_likelihood.COPASI_SE = '/opt/COPASI/COPASI-4.38.268-Linux-64bit/bin/CopasiSE'

alternatively, process_dir also accepts the following named arguments:

copasi_se: if you dont want to change the global default for CopasiSE, but just pass it along heremax_time: normally, we’d let the model run to completion, but if you specify a max time in seconds, then the computation will be stopped after that time.

so lets runt the files we have generated above, and restrict each run to 10 seconds:

[8]:

process_dir(data_dir, max_time=10)

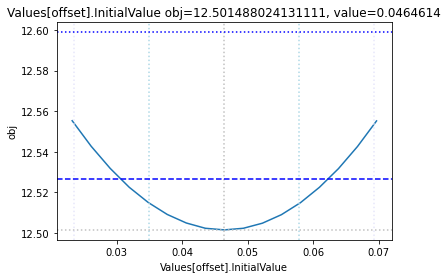

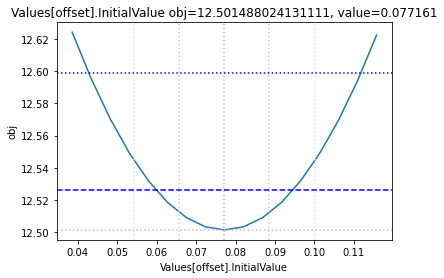

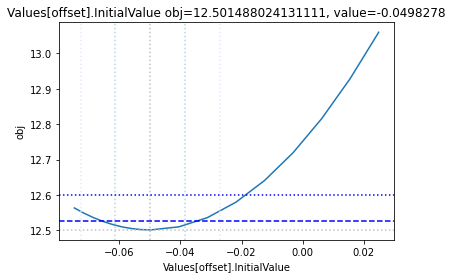

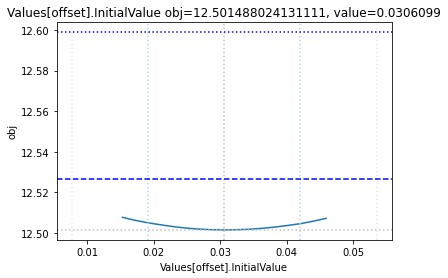

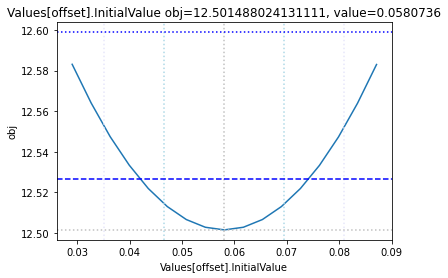

Looking at the results

The plot_data function reads all the report files from the data_dir, and combines the scans from both directions to one plot.

[9]:

plots = plot_data(data_dir)

and finally, let us remove the temporary files:

[10]:

#import shutil

#shutil.rmtree(data_dir, ignore_errors=True)

Everything together

Lets use a second example, this time we recreate the model from Schabers publication from scratch, and then run the parameter estimation using a convenience method:

[11]:

from basico import *

from basico.task_profile_likelihood import get_profiles_for_model

[12]:

new_model(name='Schaber Example', notes="""

The example from the supplement, originally described in

Schaber, J. and Klipp, E. (2011) Model-based inference of biochemical parameters and

dynamic properties of microbial signal transduction networks, Curr Opin Biotechnol, 22, 109-

116 (https://doi.org/10.1016/j.copbio.2010.09.014)

""");

[13]:

add_function('mod. MA',

'k * S * T',

'irreversible',

mapping={'S': 'modifier', 'T': 'substrate'}

);

[14]:

add_reaction('v1', 'T -> Tp; S', function='mod. MA')

add_reaction('v2', 'Tp -> T')

add_reaction('decay', 'S ->', mapping={'k1': 0.5})

get_reactions()[['scheme', 'function', 'mapping']]

[14]:

| scheme | function | mapping | |

|---|---|---|---|

| name | |||

| v1 | T -> Tp; S | mod. MA | {'k': 0.1, 'S': 'S', 'T': 'T'} |

| v2 | Tp -> T | Mass action (irreversible) | {'k1': 0.1, 'substrate': 'Tp'} |

| decay | S -> | Mass action (irreversible) | {'k1': 0.5, 'substrate': 'S'} |

[15]:

set_species('Tp', initial_concentration=0)

# observable

add_species('TpFit', status='assignment', expression='{[Tp]}/0.5')

get_species()[['initial_concentration']]

[15]:

| initial_concentration | |

|---|---|

| name | |

| S | 1.0 |

| T | 1.0 |

| Tp | 0.0 |

| TpFit | 0.0 |

[16]:

add_experiment('Data set 1', pd.DataFrame(data = {

'Time': [1, 2, 4, 6],

'[TpFit]': [1, 0.88, 0.39, 0.22],

#'sd': [0.09, 0.09, 0.09, 0.09]

}

));

[17]:

set_fit_parameters([

{'name': '(v1).k', 'lower': 0, 'upper': 100},

{'name': '(v2).k1', 'lower': 0, 'upper': 100},

])

sol = run_parameter_estimation(method=PE.HOOKE_JEEVES, update_model=True)

sol[['sol']]

[17]:

| sol | |

|---|---|

| name | |

| (v1).k | 2.267945 |

| (v2).k1 | 1.425208 |

[18]:

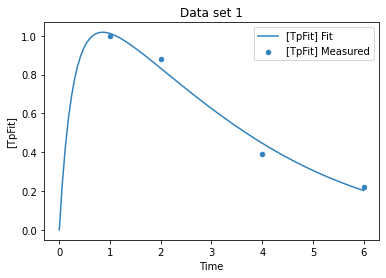

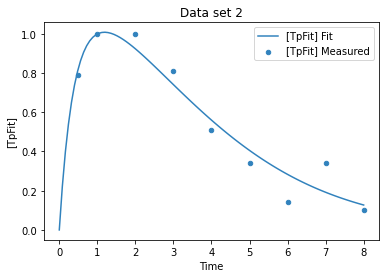

plot_per_experiment(solution=sol);

while the fit looks ok, lets have a look at the likelyhood profile

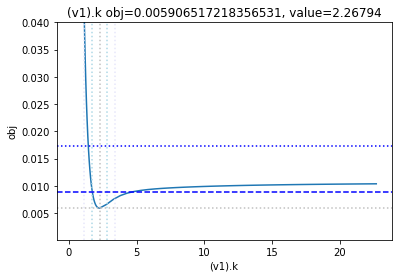

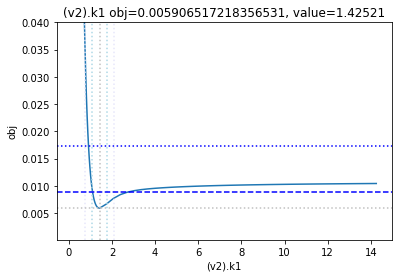

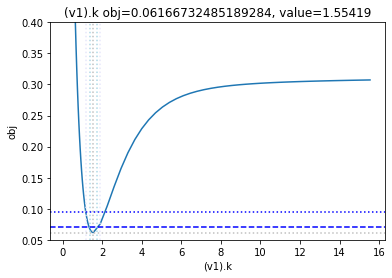

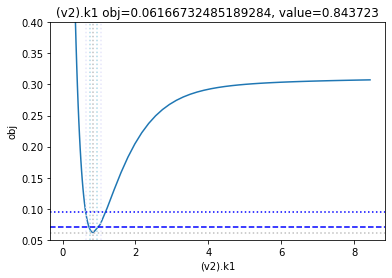

the utility method get_profiles_for_model combines all of the above and runs the profile likelihood for the currently loaded model and fit. You can supply all the arguments of prepare_files and process_dir. By defaut get_profiles_for_model will use a temporary directory, and remove the files afterwards. If you would like the files to stay on your disc, you can supply a data_dir, in which all files will be generated, and reports placed.

We additionally use the scale_mode parameter, that allows to limit the y_axis to desired limits.

[19]:

get_profiles_for_model(lower_adjustment=0.1, upper_adjustment=10, scale_mode={'top': 0.04, 'bottom': 0.0001});

as the result from the publication suggests, the parameter is not identifiable. No lets the run the second dataset as well:

[20]:

remove_experiments()

add_experiment('Data set 2',pd.DataFrame(data = {

'Time': [0.5, 1, 2, 3, 4, 5, 6, 7, 8],

'[TpFit]': [0.79, 1, 1, 0.81, 0.51, 0.34, 0.14, 0.34, 0.1],

#'sd': [0.1,0.1,0.1,0.1,0.1,0.1,0.1,0.1,0.1]

}))

sol = run_parameter_estimation(method=PE.HOOKE_JEEVES, update_model=True)

sol[['sol']]

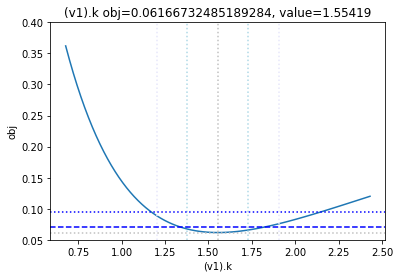

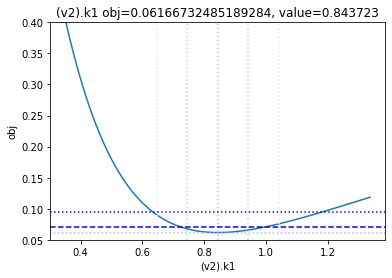

[20]:

| sol | |

|---|---|

| name | |

| (v1).k | 1.554192 |

| (v2).k1 | 0.843723 |

[21]:

plot_per_experiment(solution=sol);

[22]:

get_profiles_for_model(lower_adjustment=0.1, upper_adjustment=10, scale_mode={'top': 0.4, 'bottom': 0.05});

looking at the profile we find what we want to see.

A new option added, is to generate the profiles for a certain number of standard deviations as calculated by the COPASI fit. Lets try this here, by going to the ranges of -5 / +5 standard deviations from the value found:

[23]:

get_profiles_for_model(lower_adjustment="-5SD", upper_adjustment="+5SD", scale_mode={'top': 0.4, 'bottom': 0.05});